Pretrained Speech Encoders and Efficient Fine-tuning Methods for Speech Translation: UPC at IWSLT 2022

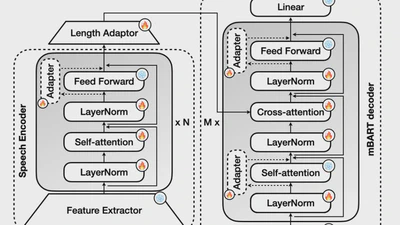

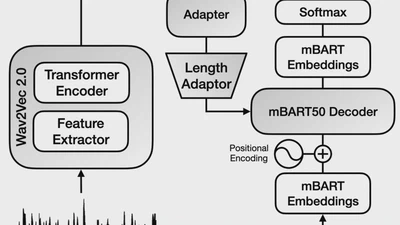

We describe our submission to the IWSLT 2022 shared task: an end-to-end speech translation system built on large pretrained models. We leverage efficient fine-tuning techniques like …